‘Dad’s subwoofer’, an electric walking cane, and, is that lightning!?

- Wits University

4th-year engineering students show off their bold and visionary solutions with amazing final-year projects.

The School of Electrical and Information Engineering (EIE) this year celebrates the largest group of 4th-year students the School has ever had, a total of 243 students.

Speaking at the School’s annual EIE Open Day, Head of School, Professor Estelle Trengove, said it is a special year as this cohort of students is not only the largest group ever but will be graduating in the year Wits is celebrating its centenary.

“It’s been a joyful year to be a Witsie and I hope it has made your year even more special,” she said in welcoming the students online to Open Day.

Every year 4th-year students gather for Open Day to showcase their capstone projects for the year. “This is the crowning glory of all the hard work they put in,” Trengove said. Projects are identified by academic staff and students, and each pair of students works together to find solutions to unique, complex engineering problems that have a direct impact on everyday challenges.

This year Open Day was held for the second time on the Gather Town platform: a virtual, interactive, custom 2D world. Following the welcoming address in the ‘main conference hall’, participants and visitors steered their avatars and ‘walked’ around the exhibition halls in the ‘EIE Gather Town’ to engage with students about their projects in real time using the video chat function. Students were also able to display their project posters, videos and other virtual content during their presentations.

Africa’s youth and young entrepreneurs are the future job creators of the continent and the best hope for tackling joblessness, and this year’s innovative projects show the creativity, resourcefulness and ingenuity that Wits students bring to devising bold and visionary solutions.

Using ‘Dad’s subwoofer’ to detect underwater earthquakes and volcanoes

Vera Fourie, an electrical engineering student, partnered with Mandla Masogo, an information engineering student, for their project titled: Underwater seismic event detection using visible light.

They set out to determine whether disturbances in visible light travelling through water can be used to detect an underwater seismic event, such as a volcanic eruption or an earthquake.

But the scale of such a real-life test would be an oceanic problem to overcome. Instead, Fourie’s lightbulb moment came when she remembered how subwoofer speakers were used in MTV music videos from the early 2000s to ‘blow the girl’s hair out’.

“Our project is conceptual because we had to build a very small testbed to work with. We used a water tank with a laser and photoresistor to sense whether there are any disturbances in the water,” she explained.

Creating the level of disturbance needed for their measurements, though, would have meant buying a very expensive high-speed linear actuator. “Cost considerations played an important role in our project. Seismic events typically have low frequencies, and because a subwoofer has very low frequencies, I modified my Dad’s subwoofer to test if it could mimic the frequencies of an underwater earthquake event,” Fourie said.

To create a reference point for mimicking an actual seismic event on a small scale, the team compared their seismic charts with charts from real events: the 2015 quake in Japan, the 2004 Thailand earthquake and tsunami, and events in Hawaii. And it worked.

Masoga was up next and, with ‘a lot of coding’, wrote machine-learning algorithms to analyse the transmitted data and classify an event as seismic or not.

“The light transmitter transmits binary data, and this data goes through the tank to the light receiver. As the disturbances (from the subwoofer) happen, the data gets distorted.

“We then take that data, capture it and extract different characteristics from it, such as the mean, mode, median and variance, and then we put the data through the classification algorithms to determine whether it was a seismic event,” he explained.
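The processing chain Masoga describes (summary statistics per window of received data, then a classifier) can be sketched in Python. This is a minimal illustration under stated assumptions, not the team’s code: the article does not name the classification algorithms, so a simple nearest-centroid classifier stands in for them, and the receiver readings, window sizes and the labels `"calm"` and `"seismic"` are hypothetical.

```python
import math
import statistics
from typing import Sequence

# Hypothetical sketch: the classifier choice and all sample values are illustrative.


def extract_features(window: Sequence[int]) -> list[float]:
    """Mean, mode, median and variance of one window of receiver readings."""
    return [
        statistics.mean(window),
        statistics.mode(window),
        statistics.median(window),
        statistics.pvariance(window),  # population variance of the window
    ]


def train_centroids(labelled: list[tuple[Sequence[int], str]]) -> dict[str, list[float]]:
    """Average the feature vectors of each labelled class."""
    grouped: dict[str, list[list[float]]] = {}
    for window, label in labelled:
        grouped.setdefault(label, []).append(extract_features(window))
    return {
        label: [statistics.mean(column) for column in zip(*vectors)]
        for label, vectors in grouped.items()
    }


def classify(window: Sequence[int], centroids: dict[str, list[float]]) -> str:
    """Assign the label whose centroid is nearest in feature space."""
    features = extract_features(window)
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


training = [
    ([100, 101, 100, 99, 100], "calm"),
    ([100, 100, 101, 100, 99], "calm"),
    ([100, 140, 60, 140, 60], "seismic"),
    ([100, 130, 70, 130, 70], "seismic"),
]
centroids = train_centroids(training)
print(classify([100, 150, 50, 150, 50], centroids))  # seismic
```

In this toy data the variance does most of the work: calm water barely changes the received signal, while a disturbance spreads the readings widely around the same mean and median.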

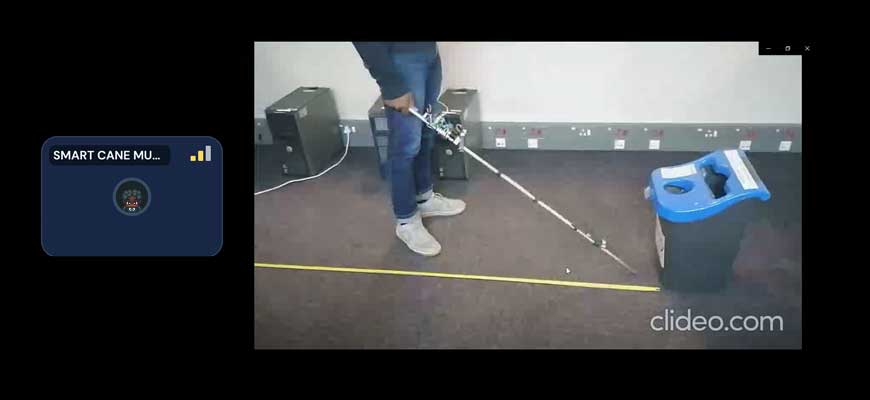

An electronically enabled cane for the visually impaired

With his project, Mukundi Mushiana investigated, designed, built and tested an electronically enabled walking cane that can provide real-time feedback to visually impaired people to help them navigate their surroundings.

“As a biomedical engineering student, I chose this project because it made me think about different aspects – not only from an electrical engineering point of view but I also had to research how visually impaired individuals live their everyday lives,” Mushiana said.

He explained how, traditionally, visually impaired people use a white cane to tap objects to get a ‘feel’ for what kind of object it is and where it is in relation to them.

“With my electronically enabled cane, an individual does not have to tap the object. The cane uses an echo-location application where an ultrasonic signal is sent from the cane and then bounces off the obstacle and echoes back to the device,” Mushiana explained.

Using the time between sending the signal and receiving its echo, the device calculates the distance to the obstacle, telling the person how far away it is so that they can decide in advance how to avoid it.
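The distance calculation is a standard time-of-flight estimate: the pulse travels to the obstacle and back, so the distance is half the round-trip path, d = v·t/2. The sketch below assumes sound travels at about 343 m/s (dry air at roughly 20 °C); the warning distances and the `warning_level` helper are hypothetical, since the article does not say how the cane presents its feedback.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # dry air at roughly 20 °C


def echo_distance_m(round_trip_s: float, speed: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Distance to an obstacle from the ultrasonic echo's round-trip time."""
    # The pulse covers the gap twice (out and back), so halve the path length.
    return speed * round_trip_s / 2


def warning_level(distance_m: float, stop_m: float = 0.5, caution_m: float = 1.5) -> str:
    """Map a distance onto a feedback level (hypothetical thresholds)."""
    if distance_m <= stop_m:
        return "stop"
    if distance_m <= caution_m:
        return "caution"
    return "clear"


d = echo_distance_m(0.005)
print(round(d, 2), warning_level(d))  # 0.86 caution
```

For example, an echo returning after 5 ms puts the obstacle about 0.86 m away.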

The device uses an ultrasonic sensor as well as an accelerometer that measures the acceleration of an object, and a gyroscope that measures the angular motion of an object.

“Normally the latter two sensors are used in aeroplanes. In this case, they can determine if a person has fallen by detecting that the person’s acceleration and angle have changed. This information then enables the device to raise an alarm that is audible to people nearby, and to send an SMS alert with GPS coordinates to the cellphones of the next of kin, who can then come to assist or direct help to the individual,” he said.
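One common way to turn those two sensor readings into a fall decision is a two-stage check: a spike in acceleration (the impact) followed by a large change in orientation. The Python sketch below illustrates that idea under its own assumptions: the article does not describe Mushiana’s actual rule, the thresholds are hypothetical, and the tilt angle is assumed to be already estimated from the gyroscope. Sending the SMS itself (for example through a GSM module) is left out, so the hypothetical `format_alert` only builds the message text, and the coordinates are example values.

```python
import math
from dataclasses import dataclass

G = 9.81  # standard gravity, m/s^2

# Hypothetical thresholds; a real device would tune these on recorded falls.
IMPACT_THRESHOLD_MS2 = 2.5 * G
TILT_THRESHOLD_DEG = 60.0


@dataclass
class ImuSample:
    ax: float  # accelerometer x, m/s^2
    ay: float  # accelerometer y, m/s^2
    az: float  # accelerometer z, m/s^2
    tilt_deg: float  # angle from vertical, assumed already estimated from the gyroscope


def accel_magnitude(sample: ImuSample) -> float:
    return math.sqrt(sample.ax ** 2 + sample.ay ** 2 + sample.az ** 2)


def detect_fall(samples: list[ImuSample]) -> bool:
    """Flag a fall when an impact spike is followed by a large tilt."""
    impact_seen = False
    for sample in samples:
        if accel_magnitude(sample) >= IMPACT_THRESHOLD_MS2:
            impact_seen = True
        if impact_seen and sample.tilt_deg >= TILT_THRESHOLD_DEG:
            return True
    return False


def format_alert(lat: float, lon: float) -> str:
    """Text for the SMS to the next of kin (sending it is out of scope here)."""
    return f"FALL ALERT: possible fall detected at {lat:.5f}, {lon:.5f}"


fall = [
    ImuSample(0.0, 0.0, 9.81, 5.0),    # walking upright
    ImuSample(15.0, 5.0, 25.0, 30.0),  # impact spike
    ImuSample(9.5, 0.0, 1.0, 80.0),    # lying almost horizontal
]
if detect_fall(fall):
    print(format_alert(-26.1929, 28.0305))
```

Requiring both conditions is what keeps a hard knock of the cane, or simply leaning it against a wall, from raising an alarm on its own.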

Automatic camera triggering system to capture lightning events

Wits has a rich history of world-class lightning research conducted through its Johannesburg Lightning Research Laboratory, based in the School of Electrical and Information Engineering. The study of lightning is also crucial to saving lives in South Africa, as Johannesburg has an unusually high lightning flash density for a country’s main economic centre.

With their 4th-year project, Sipho Xaba and Londiwe Luvungo aimed to make the video capture of lightning events more efficient.

“If you want to capture lightning events, your camera must run 24/7, which means the amount of video footage that is taken requires huge amounts of storage. We are devising an automatic triggering system for high-speed cameras. The camera is on standby and when a lightning event occurs, it is triggered to capture the flash on video but only stores the video if it is identified as a lightning event, after which the camera switches off again,” the team explained.
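The standby-and-trigger behaviour can be sketched as follows. As a simplifying assumption, each video frame is reduced to one brightness number, and a frame-to-frame jump above a hypothetical threshold starts a clip; a small rolling buffer keeps the last few standby frames so the start of the flash is not lost. The `is_lightning` check is passed in as a parameter because the team’s identification algorithms are not described here, and all names and values are illustrative.

```python
from collections import deque
from typing import Callable, Iterable, Optional


def triggered_clips(frames: Iterable[float], jump: float = 50.0,
                    pre: int = 3, post: int = 5) -> list[list[float]]:
    """Cut short clips around sudden brightness jumps (hypothetical trigger).

    Each frame is reduced to one brightness value (a simplifying assumption).
    `pre` standby frames are kept before the trigger and `post` frames after it.
    """
    if post < 1:
        raise ValueError("post must be at least 1")
    standby = deque(maxlen=pre)  # rolling buffer while waiting for a trigger
    clips: list[list[float]] = []
    recording: Optional[list[float]] = None
    remaining = 0
    previous = None
    for frame in frames:
        if recording is not None:
            recording.append(frame)
            remaining -= 1
            if remaining == 0:
                clips.append(recording)
                recording = None
        elif previous is not None and frame - previous >= jump:
            # Trigger: start the clip with the buffered standby frames.
            recording = list(standby) + [frame]
            remaining = post
        standby.append(frame)
        previous = frame
    if recording is not None:  # stream ended mid-clip
        clips.append(recording)
    return clips


def capture_and_store(frames: Iterable[float],
                      is_lightning: Callable[[list[float]], bool],
                      **trigger_options) -> list[list[float]]:
    """Keep only the triggered clips that the classifier accepts."""
    return [clip for clip in triggered_clips(frames, **trigger_options)
            if is_lightning(clip)]


night = [10, 10, 10, 10, 200, 180, 60, 20, 10, 10]
stored = capture_and_store(night, lambda clip: max(clip) > 150, post=3)
print(stored)  # [[10, 10, 10, 200, 180, 60, 20]]
```

Only the short clip around the trigger is ever handed to storage, which is where the saving over recording around the clock comes from.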

They programmed an embedded central processing unit (CPU) with three algorithms that detect lightning flashes and identify whether an event is actual lightning or just another object, such as an aeroplane passing by.

“So we can mitigate those false triggers. If it does detect an actual lightning event, only then will it store that video in the database, and if it is a false trigger, it won’t store the video,” they said.
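As one illustration of how a false trigger might be rejected, the sketch below uses a single duration heuristic: a lightning flash lights up the scene only briefly, whereas an aeroplane’s lights stay in view much longer. This is a hypothetical stand-in, not one of the team’s three algorithms, which the article does not describe; the per-frame peak-brightness input, the frame rate and the thresholds are all assumptions.

```python
from typing import Sequence


def looks_like_lightning(peak_brightness: Sequence[float], fps: float, baseline: float,
                         min_rise: float = 80.0, max_flash_s: float = 1.5) -> bool:
    """Accept a clip only if its bright period is brief (hypothetical heuristic).

    peak_brightness holds the brightest pixel value of each frame, and
    baseline is the usual night-sky level; both are illustrative assumptions.
    """
    bright = [value - baseline >= min_rise for value in peak_brightness]
    if not any(bright):
        return False  # nothing bright enough: treat as a false trigger
    first = bright.index(True)
    last = len(bright) - 1 - bright[::-1].index(True)
    duration_s = (last - first + 1) / fps
    # A brief flash passes; a light that stays in view (e.g. an aircraft) does not.
    return duration_s <= max_flash_s


fps = 100.0
flash = [20] * 10 + [220] * 30 + [20] * 10  # bright for 0.3 s
aircraft = [20] * 10 + [150] * 500          # bright for 5 s
print(looks_like_lightning(flash, fps, baseline=20))     # True
print(looks_like_lightning(aircraft, fps, baseline=20))  # False
```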